on Aug 13

Support All Flux Models for Ablative Experiments

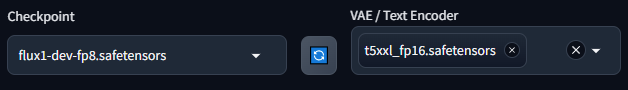

Download the base model and VAE (raw float16) from the official Flux releases here and here.

Download clip-l and t5-xxl from here or from our mirror.

Put the base model in models\Stable-diffusion.

Put the VAE in models\VAE.

Put clip-l and t5 in models\text_encoder.
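As a rough illustration of the folder layout above, here is a small Python sketch that maps each kind of file to its target folder. The `kind` labels and the helper names are made up for this example and are not part of the WebUI; only the three folder names come from the steps above.

```python
from pathlib import Path

# Folder names are taken from the steps above.
# The dictionary keys ("base", "vae", "text_encoder") are hypothetical labels.
TARGET_DIRS = {
    "base": Path("models") / "Stable-diffusion",
    "vae": Path("models") / "VAE",
    "text_encoder": Path("models") / "text_encoder",
}


def destination(webui_root, kind, filename):
    """Return the path where a downloaded file of the given kind should go."""
    return Path(webui_root) / TARGET_DIRS[kind] / Path(filename).name


def missing_dirs(webui_root):
    """List the target folders that do not exist yet under webui_root."""
    root = Path(webui_root)
    return [d for d in TARGET_DIRS.values() if not (root / d).is_dir()]


if __name__ == "__main__":
    # "webui" is a placeholder for wherever your install lives.
    print(destination("webui", "vae", "ae.safetensors"))
    print(missing_dirs("webui"))
```

The sketch only computes paths; moving or copying the files is left to you.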

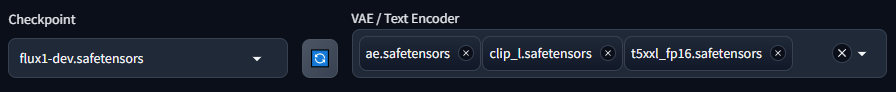

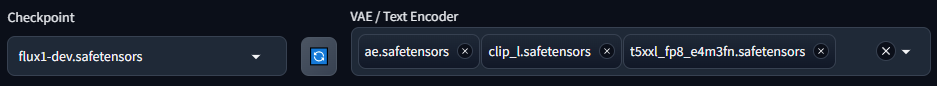

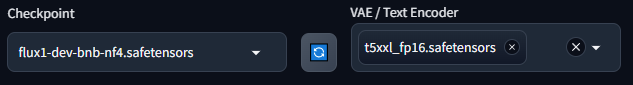

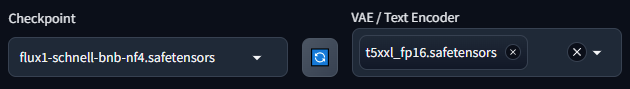

Possible options

You can load nearly arbitrary combinations.

etc ...

Fun fact

Now you can even load clip-l separately for SD 1.5.

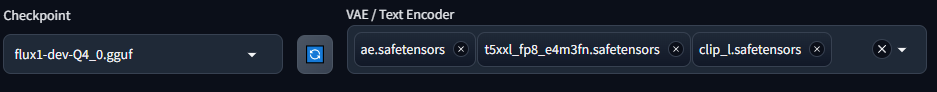

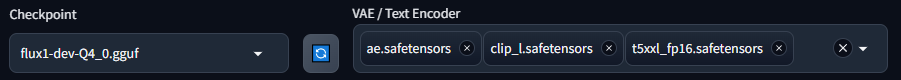

GGUF

Download the VAE (raw float16, 'ae.safetensors') from the official Flux releases here or here.

Download clip-l and t5-xxl from here or from our mirror.

Download GGUF models here or here.

Put the base model in models\Stable-diffusion.

Put the VAE in models\VAE.

Put clip-l and t5 in models\text_encoder.

Below are some comments copied from elsewhere

Also, people should note that GGUF is purely a compression technique: the model is smaller but also slower, because tensors need extra steps to decompress and the computation itself still runs in PyTorch (unless someone is crazy enough to port the llama.cpp compilers). (UPDATE Aug 24: Someone did it!! Congratulations to leejet for porting it to stable-diffusion.cpp here. Now people need to take a look at more possibilities for a cpp backend...)
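To make the "extra steps to decompress" concrete, here is a minimal pure-Python sketch of a Q8_0-style round trip: weights are stored as blocks of 32 small integers plus one scale per block, and they have to be expanded back to floats before the usual multiply-add can run. This is a simplified illustration, not the actual GGUF loader or the WebUI code; the real format stores the scale in fp16, while this sketch keeps it as a Python float, and all function names are hypothetical.

```python
import random

BLOCK = 32  # Q8_0 groups weights into blocks of 32 values


def quantize_q8_0(values):
    """Pack floats into (scale, list of ints in [-127, 127]) blocks."""
    blocks = []
    for i in range(0, len(values), BLOCK):
        chunk = values[i:i + BLOCK]
        amax = max(abs(v) for v in chunk)
        scale = amax / 127.0 if amax > 0 else 1.0
        q = [max(-127, min(127, round(v / scale))) for v in chunk]
        blocks.append((scale, q))
    return blocks


def dequantize_q8_0(blocks):
    """Expand the blocks back to floats (the extra decompression step)."""
    return [scale * q for scale, qs in blocks for q in qs]


def dot_with_quantized(blocks, x):
    """Dot product: decompress first, then do ordinary float math."""
    w = dequantize_q8_0(blocks)
    return sum(a * b for a, b in zip(w, x))


if __name__ == "__main__":
    rng = random.Random(0)
    weights = [rng.gauss(0.0, 1.0) for _ in range(64)]
    x = [rng.gauss(0.0, 1.0) for _ in range(64)]
    exact = sum(a * b for a, b in zip(weights, x))
    print(exact, dot_with_quantized(quantize_q8_0(weights), x))
```

The storage is smaller, but every use of the weights pays for `dequantize_q8_0` on top of the normal computation.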

BNB (NF4) is a computational acceleration library that makes things faster by replacing PyTorch ops with native low-bit CUDA kernels.

NF4 and Q4_0 should be very similar; the differences are that Q4_0 has a smaller chunk size and NF4 has more Gaussian-distributed quants. I do not recommend trusting comparisons based on one or two images. I would also like a smaller chunk size in NF4, but bnb seems to hard-code some thread numbers, and changing that is non-trivial.
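The two differences mentioned above (chunk size and how the 16 levels are spaced) can be sketched in a few lines of pure Python. This is a simplified illustration under several assumptions: the NF4 levels are the ones I recall from bitsandbytes, rounded to 4 decimals, so treat them as approximate; the uniform codebook ignores the sign trick real Q4_0 uses for its scale; and the block sizes of 64 for NF4 and 32 for Q4_0 are the commonly used defaults, not something read from this WebUI's code.

```python
import random

# Approximate NF4 levels (rounded); denser near zero, like a Gaussian.
NF4_LEVELS = [-1.0, -0.6962, -0.5251, -0.3949, -0.2844, -0.1848, -0.0911, 0.0,
              0.0796, 0.1609, 0.2461, 0.3379, 0.4407, 0.5626, 0.7230, 1.0]

# Simplified Q4_0-like codebook: 16 evenly spaced levels.
UNIFORM_LEVELS = [i / 8 for i in range(-8, 8)]


def quantize_blockwise(values, levels, block_size):
    """Scale each block by its absmax, snap to the nearest level, scale back."""
    out = []
    for i in range(0, len(values), block_size):
        chunk = values[i:i + block_size]
        amax = max(abs(v) for v in chunk) or 1.0
        for v in chunk:
            t = v / amax  # normalized to [-1, 1]
            nearest = min(levels, key=lambda lv: abs(lv - t))
            out.append(nearest * amax)
    return out


def mse(a, b):
    """Mean squared error between two equal-length lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


if __name__ == "__main__":
    rng = random.Random(0)
    w = [rng.gauss(0.0, 1.0) for _ in range(4096)]
    print("NF4-like  (block 64):", mse(w, quantize_blockwise(w, NF4_LEVELS, 64)))
    print("Q4_0-like (block 32):", mse(w, quantize_blockwise(w, UNIFORM_LEVELS, 32)))
```

Which of the two comes out ahead depends on the weight distribution and the seed, which is one more reason not to read too much into a single comparison.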

However, Q4_1 and Q4_K are technically guaranteed to be more precise than NF4, but with even more computation overhead, and that overhead may cost more than simply moving higher-precision weights from CPU to GPU. If that happens, the quant loses its point.

And Q8 is always more precise than fp8 (and a bit slower than fp8).
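A rough way to see why Q8 tends to beat fp8 on precision: a Q8_0 block spends 8 bits on evenly spaced steps relative to the block's largest value, while fp8 keeps only a few mantissa bits per number. The sketch below compares the two on random Gaussian weights. It is an approximation under stated assumptions: fp8 is modeled as e4m3-style rounding to 4 significant bits with exponent limits and subnormals ignored, the Q8_0 scale is kept as a Python float rather than fp16, and the function names are made up for this example.

```python
import math
import random


def fp8_e4m3_round(x):
    """Round x to 4 significant bits (1 implicit + 3 stored mantissa bits)."""
    if x == 0.0:
        return 0.0
    m, e = math.frexp(x)  # x == m * 2**e with 0.5 <= |m| < 1
    return math.ldexp(round(m * 16) / 16, e)


def q8_0_roundtrip(values, block=32):
    """Quantize to Q8_0-style blocks and expand back to floats."""
    out = []
    for i in range(0, len(values), block):
        chunk = values[i:i + block]
        scale = max(abs(v) for v in chunk) / 127.0 or 1.0
        out.extend(round(v / scale) * scale for v in chunk)
    return out


def mse(a, b):
    """Mean squared error between two equal-length lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def compare(n=4096, seed=0):
    """Return (Q8_0 error, fp8 error) on n Gaussian samples."""
    rng = random.Random(seed)
    w = [rng.gauss(0.0, 1.0) for _ in range(n)]
    return mse(w, q8_0_roundtrip(w)), mse(w, [fp8_e4m3_round(v) for v in w])


if __name__ == "__main__":
    q8_err, fp8_err = compare()
    print("Q8_0 mse:", q8_err)
    print("fp8  mse:", fp8_err)
```

This measures weight reconstruction error only; it says nothing about speed or about final image quality.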

Precision: fp16 >> Q8 > Q4

Precision for Q8: Q8_K (not available) > Q8_1 (not available) > Q8_0 >> fp8

Precision for Q4: Q4_K_S >> Q4_1 > Q4_0

Precision for NF4: between Q4_1 and Q4_0; it may be slightly better or worse, since they use different metric systems

Speed (without offloading, e.g., 80GB VRAM H100) from fast to slow: fp16 ≈ NF4 > fp8 >> Q8 > Q4_0 >> Q4_1 > Q4_K_S > others

Speed (with offloading, e.g., 8GB VRAM) from fast to slow: NF4 > Q4_0 > Q4_1 ≈ fp8 > Q4_K_S > Q8_0 > Q8_1 > others ≈ fp16
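If you want to pick among the formats you actually have on disk, the two speed orderings above can be written down as plain lists. The sketch below does only that: it encodes the rankings quoted above, which are estimates from this post rather than benchmarks, flattens the "≈" and ">>" relations into a simple order, leaves out "others", and uses the standard GGUF spelling Q4_K_S. The helper name is hypothetical.

```python
# Fast to slow, as listed above. Ties ("≈") are flattened into list order.
SPEED_NO_OFFLOAD = ["fp16", "NF4", "fp8", "Q8", "Q4_0", "Q4_1", "Q4_K_S"]
SPEED_OFFLOAD = ["NF4", "Q4_0", "Q4_1", "fp8", "Q4_K_S", "Q8_0", "Q8_1", "fp16"]


def fastest(candidates, offload):
    """Return the candidate that appears earliest in the matching ranking."""
    order = SPEED_OFFLOAD if offload else SPEED_NO_OFFLOAD
    known = [c for c in candidates if c in order]
    if not known:
        raise ValueError("none of the candidates appear in the ranking")
    return min(known, key=order.index)


if __name__ == "__main__":
    print(fastest(["Q8_0", "NF4", "fp16"], offload=True))
    print(fastest(["fp16", "Q4_0"], offload=False))
```

Speed is only half of the trade-off; the precision orderings above still apply to whatever this returns.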