Review

Hello everyone! I want to share some of my impressions of Hunyuan-DiT, the Chinese model from Tencent. First, let's start with some essential background so that we (Westerners) can figure out what it is meant for:

Hunyuan-DiT works well in multi-modal dialogue with users (mainly in Chinese and English). The better you explain your prompt, the better your generation will be; you don't need to limit yourself to keywords, although it understands them quite well. In terms of quality, HYDiT 1.2 sits between SDXL and SD3: it is not as powerful as SD3, but it beats SDXL at almost everything. For me, it is what SDXL should have been in the first place. One of the best parts is that Hunyuan-DiT is compatible with almost all SDXL node suites.

Hunyuan-DiT-v1.2 has 1.5B parameters.

mT5 has 1.6B parameters.

Recommended VAE: sdxl-vae-fp16-fix

Recommended Sampler: ddpm, ddim, or dpmms

Prompt as you would in SD1.5, but don't be shy about going further in terms of length. HunyuanDiT combines two text encoders, a bilingual CLIP and a multilingual T5 encoder, to improve language understanding and increase the context length. They split your prompt into meaningful token IDs and then process the entire prompt; the limit is 100 IDs, or up to 256 tokens. T5 works well on a variety of tasks out of the box by prepending a different prefix to the input for each task.
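If you want a quick sanity check on prompt length before generating, here is a minimal sketch. It counts whitespace-separated words as a rough stand-in for tokens, which is an assumption: the real CLIP and T5 tokenizers usually produce more tokens than words. The 256 budget comes from the limit mentioned above; the 77 budget is CLIP's usual context length and is my assumption, not a figure from this post. The names `PromptBudget` and `estimate_budget` are made up for illustration.

```python
from dataclasses import dataclass

# 256 is the T5 limit quoted in the text; 77 is an assumed CLIP budget.
T5_MAX_TOKENS = 256
CLIP_MAX_TOKENS = 77


@dataclass
class PromptBudget:
    words: int       # whitespace-separated word count (proxy for tokens)
    fits_clip: bool  # True if the word count is within the CLIP budget
    fits_t5: bool    # True if the word count is within the T5 budget


def estimate_budget(prompt: str,
                    clip_max: int = CLIP_MAX_TOKENS,
                    t5_max: int = T5_MAX_TOKENS) -> PromptBudget:
    """Roughly estimate whether a prompt fits each encoder's budget."""
    words = len(prompt.split())
    return PromptBudget(words=words,
                        fits_clip=words <= clip_max,
                        fits_t5=words <= t5_max)


if __name__ == "__main__":
    budget = estimate_budget("a detailed portrait of an old fisherman at dawn")
    print(budget)
```

Treat the result as a hint only: a prompt that barely "fits" by word count may still be truncated by the real tokenizer.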

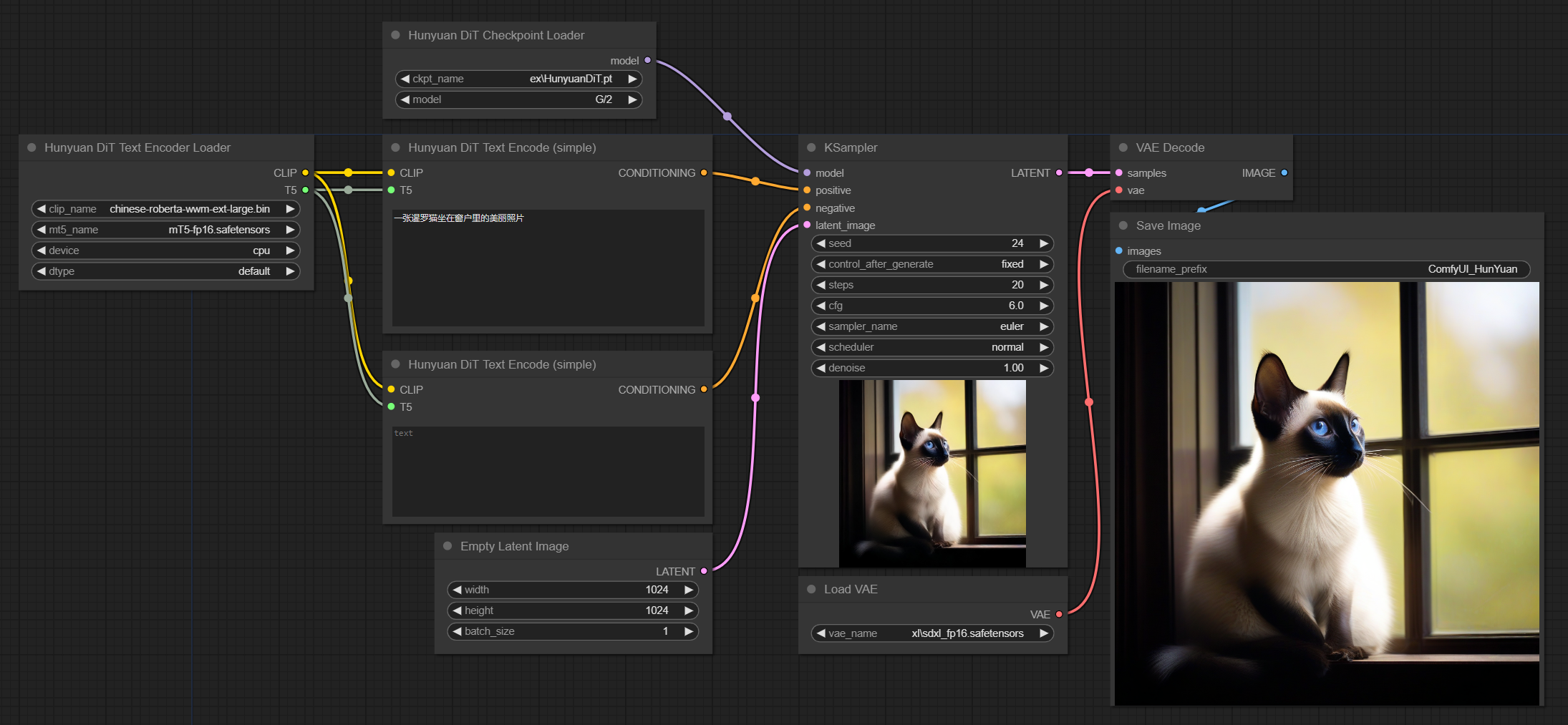

To improve your prompt, place your summarized prompt in the CLIP:TextEncoder node box (if you disabled T5), or place your extended prompt in the T5:TextEncoder node box (if you enabled T5).

You can use the "simple" text encode node to only use one prompt, or you can use the regular one to pass different text to CLIP/T5.
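The routing described above can be sketched as a small helper. This is only an illustration of the logic, not the actual node code: `route_prompts` and the `"clip"`/`"t5"` keys are hypothetical names I made up, not real ComfyUI field names.

```python
from typing import Dict, Optional


def route_prompts(short_prompt: str,
                  long_prompt: Optional[str],
                  t5_enabled: bool) -> Dict[str, Optional[str]]:
    """Decide which text goes to which encoder box (hypothetical labels)."""
    if not t5_enabled:
        # T5 disabled: only the short prompt is used, via CLIP.
        return {"clip": short_prompt, "t5": None}
    if long_prompt is None:
        # "Simple" node behaviour: one prompt shared by both encoders.
        return {"clip": short_prompt, "t5": short_prompt}
    # Regular node behaviour: different text for CLIP and T5.
    return {"clip": short_prompt, "t5": long_prompt}


if __name__ == "__main__":
    print(route_prompts("red fox, snow",
                        "A red fox walking through deep snow at sunrise.",
                        t5_enabled=True))
```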

The main downside is that the model only gives good results at moderate step counts (which are high by TensorArt standards): 40 steps is the baseline in most cases.
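To keep the recommendations in this post in one place, here is a small sketch that checks a set of generation settings against them. The values (samplers, VAE name, 40 steps) are the ones given in this post; the function name `check_settings` and the warning wording are my own and purely illustrative.

```python
from typing import List

# Values taken from the recommendations in this post.
RECOMMENDED_SAMPLERS = {"ddpm", "ddim", "dpmms"}
RECOMMENDED_VAE = "sdxl-vae-fp16-fix"
BASELINE_STEPS = 40


def check_settings(sampler: str, steps: int, vae: str) -> List[str]:
    """Return warnings for settings that differ from the recommendations."""
    warnings: List[str] = []
    if sampler.lower() not in RECOMMENDED_SAMPLERS:
        warnings.append(f"sampler '{sampler}' is not one of the recommended ones")
    if steps < BASELINE_STEPS:
        warnings.append(f"{steps} steps is below the {BASELINE_STEPS}-step baseline")
    if vae != RECOMMENDED_VAE:
        warnings.append(f"VAE '{vae}' is not {RECOMMENDED_VAE}")
    return warnings


if __name__ == "__main__":
    for message in check_settings("euler", 20, "some-other-vae"):
        print("warning:", message)
```

An empty list means the settings match what is suggested here; it does not guarantee a good image.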

ComfyUI (Comfyflow) (Example)

TensorArt added all the elements to build a good flow for us; you should try it too.

Additional

References

Analysis of HunYuan-DiT | https://arxiv.org/html/2405.08748v1

Learn more about T5 | https://huggingface.co/docs/transformers/en/model_doc/t5

How CLIP and T5 work together | https://arxiv.org/pdf/2205.11487